Create step-wise / incremental tests in pytest.

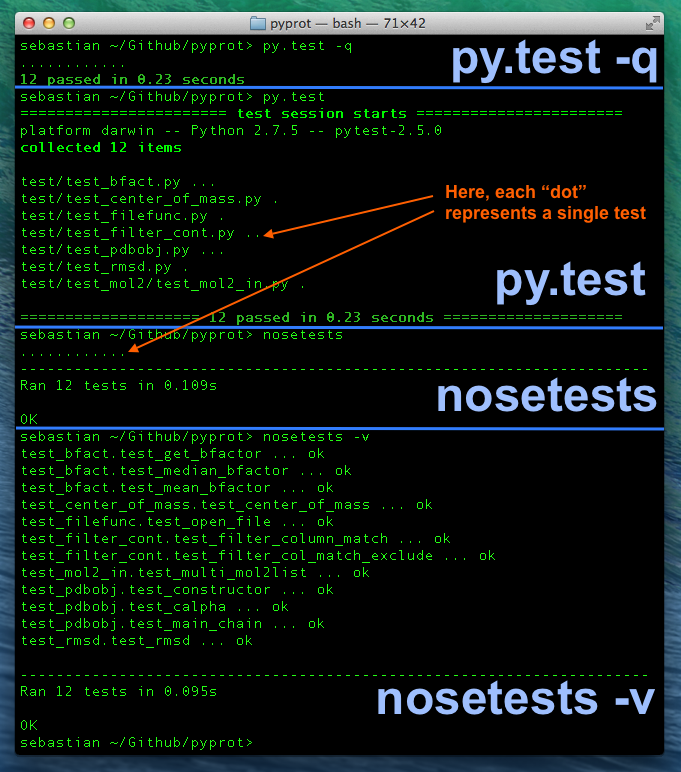

Pytest is a popular open-source Python testing framework, hosted on GitHub and primarily used for unit testing. It can be used to write simple unit tests as well as complex functional tests, and it makes it easier to write scalable test suites in Python, at all types and levels of software testing. Many projects, among them Mozilla and Dropbox, switched from unittest or nose to pytest.

Manual execution of tests has slightly changed in 1.7.0; see the explanations in the sections on calling decorated functions manually.

New: pytest-harvest compatibility fixtures, check them out!

Did you ever want to organize your tests in incremental steps, for example to improve readability in case of failure? Or to have some optional steps, executed only depending on the results of previous steps?

pytest-steps leverages pytest and its great @pytest.mark.parametrize and @pytest.fixture decorators, so that you can create incremental tests with steps without having to think about the pytest fixture/parametrize pattern that has to be implemented for your particular case.

This is particularly helpful if:

- you wish to share a state / intermediate results across test steps

- your tests already rely on other fixtures and/or parameters, such as with pytest-cases. In that case, finding the correct pytest design can be very tricky: it must ensure that each test suite starts with a brand new state object, while also ensuring that this object is actually shared across all steps.

With pytest-steps you don't have to care about the internals: it just works as expected.

Note

pytest-steps has not yet been tested with pytest-xdist. See #7

Installing

Install the package from PyPI with `pip install pytest-steps`.

1. Usage - 'generator' mode

This new mode may seem more natural and readable to non-pytest experts. However, it may be harder to debug when used in combination with other pytest tricks. In such a case, do not hesitate to switch to the good old 'explicit' mode.

a- Basics

Start with your favorite test function. There are two things to do to break it down into steps:

- decorate it with `@test_steps` to declare the steps that will be performed, as strings;

- insert as many `yield` statements in your function body as there are steps. The function should end with a `yield` (not `return`!).

Note: code written after the last `yield` will not be executed.

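For example, you can define three steps, `step_a`, `step_b` and `step_c`, where step c reuses a variable `intermediate_a` created in step a.

That's it! If you run pytest, you will now see 3 tests instead of one: one per step.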

Debugging note

When debugging, if you think that there is an issue with the execution order, you might wish to use `yield <step_name>` instead of `yield` at the end of each step. This activates a built-in checker that verifies that each step name in the declared sequence corresponds to what you actually yield at the end of that step.

b- Shared data

By design, all intermediate results created during function execution are shared between steps, since they are part of the same Python function call. You therefore have nothing to do: for example, a variable such as `intermediate_a` created in step a can be reused directly in step c.
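To see why locals are shared, here is a stdlib-only toy model of generator mode. It is an illustration, not the pytest-steps implementation: `run_steps_toy` is a hypothetical driver that creates the generator once and advances it by one `yield` per declared step, so every step runs inside the same function call. It also mimics the debugging check by comparing each yielded name with the declared one.

```python
def suite_body():
    """Generator-style test body with three steps."""
    # Step a: create an intermediate result
    intermediate_a = "hello"
    yield "step_a"

    # Step b: an unrelated check
    assert len(intermediate_a) == 5
    yield "step_b"

    # Step c: reuse the local variable created in step a
    new_text = intermediate_a + " ... augmented"
    assert new_text == "hello ... augmented"
    yield "step_c"

    # Never reached: the driver stops after the last declared step
    raise RuntimeError("code after the last yield does not run")


def run_steps_toy(gen_func, step_names):
    """Hypothetical driver: one generator call, advanced once per declared step."""
    gen = gen_func()
    completed = []
    for expected in step_names:
        yielded = next(gen)
        # Toy version of the debugging check on yielded step names
        if yielded != expected:
            raise ValueError(f"expected step {expected!r}, got {yielded!r}")
        completed.append(yielded)
    return completed


print(run_steps_toy(suite_body, ["step_a", "step_b", "step_c"]))
# ['step_a', 'step_b', 'step_c']
```

Declaring `["step_a", "step_c"]` instead raises a `ValueError`, because the second `yield` reports `step_b`.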

c- Optional steps and dependencies

In this generator mode, all steps depend on all previous steps by default: if a step fails, all subsequent steps are skipped. To circumvent this behaviour, you can declare a step as optional. This means that subsequent steps will not depend on it unless explicitly stated. For this you should:

- wrap the step into the special `optional_step` context manager;

- `yield` the corresponding context object at the end of the step, instead of `None` or the step name. This is very important, otherwise the step will be considered successful by pytest!

If some steps need an optional step to have succeeded in order to execute, you should make them optional too, and state the dependency explicitly:

- declare the dependency using the `depends_on` argument;

- use `should_run()` to test whether the code block should be executed.

Consider, for example, 4 steps where steps a and d are mandatory, while b and c are optional, with c depending on b. Running such a test with pytest shows the desired behaviour: step b fails but does not prevent step d from executing correctly, and step c is marked as skipped because its dependency (step b) failed.
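These semantics can be modeled with a small context manager. The sketch below is a hypothetical, stdlib-only stand-in (`ToyOptionalStep` is not part of pytest-steps): it swallows the exception raised inside an optional step, records it, and lets a dependent step ask `should_run()`. Running the four-step scenario reproduces the outcome described above.

```python
class ToyOptionalStep:
    """Hypothetical stand-in for an optional step context manager."""

    def __init__(self, name, depends_on=()):
        self.name = name
        self.depends_on = list(depends_on)
        self.error = None
        self.skipped = False

    def should_run(self):
        # Run only if every dependency completed (no error, not skipped)
        ok = all(dep.error is None and not dep.skipped for dep in self.depends_on)
        self.skipped = not ok
        return ok

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.error = exc  # None when the block completed normally
        return True       # swallow the exception so later steps keep running

    @property
    def status(self):
        if self.skipped:
            return "skipped"
        return "passed" if self.error is None else "failed"


def four_steps():
    statuses = {"step_a": "passed"}            # step a: mandatory, succeeds
    with ToyOptionalStep("step_b") as step_b:  # step b: optional, fails
        raise ValueError("step b fails")
    statuses["step_b"] = step_b.status
    with ToyOptionalStep("step_c", depends_on=[step_b]) as step_c:
        if step_c.should_run():                # step c: optional, depends on b
            pass
    statuses["step_c"] = step_c.status
    statuses["step_d"] = "passed"              # step d: mandatory, still runs
    return statuses


print(four_steps())
# {'step_a': 'passed', 'step_b': 'failed', 'step_c': 'skipped', 'step_d': 'passed'}
```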

d- Calling decorated functions manually

In some cases you might wish to call your test functions manually before the tests actually run. This can be very useful when you do not wish the package import times to be counted in test execution durations - typically in a 'benchmarking' use case such as shown here.

It is now possible to call a test function decorated with `@test_steps` manually. The best way to understand what you have to provide is to inspect it: its signature shows that we have to provide two arguments, `________step_name_` and `request`. Note: the same information can be obtained in a more formal way using `signature` from the `inspect` module (recent Python versions) or from the `funcsigs` package (older versions).

Once you know what arguments you have to provide, there are two rules to follow in order to execute the function manually:

- replace the `________step_name_` argument with the steps you wish to execute: `None` to execute all steps in order, or a list of steps to execute only some of them. Note that in generator mode, by design (it is a generator function), steps can only be executed in the correct order starting from the first one, but you can provide a partial list;

- replace the `request` argument with `None`, to indicate that you are executing outside of any pytest context.

Arguments order changed in 1.7.0

Unfortunately, the order of arguments for manual execution changed in version 1.7.0. This was the only way to add support for class methods. Apologies!
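The two rules can be illustrated with a stdlib-only stand-in. `toy_suite` below is hypothetical: it only reproduces the two-argument signature described above and the generator-mode constraint that a partial list of steps must start from the first step and follow the declared order. It shows how `inspect.signature` lists the arguments and what the `None`/list conventions mean.

```python
import inspect

DECLARED_STEPS = ["step_a", "step_b", "step_c"]


def _suite_body(log):
    # Generator-mode body: each yield ends one step
    log.append("a")
    yield
    log.append("b")
    yield
    log.append("c")
    yield


def toy_suite(________step_name_, request):
    """Hypothetical stand-in for manually calling a decorated test."""
    if request is not None:
        raise ValueError("pass request=None when running outside pytest")
    steps = DECLARED_STEPS if ________step_name_ is None else list(________step_name_)
    # Generator mode: steps run in declared order, starting from the first one
    if steps != DECLARED_STEPS[: len(steps)]:
        raise ValueError(f"steps must be a prefix of {DECLARED_STEPS}")
    log = []
    gen = _suite_body(log)
    for _ in steps:
        next(gen)
    return log


print(list(inspect.signature(toy_suite).parameters))  # ['________step_name_', 'request']
print(toy_suite(None, None))                           # ['a', 'b', 'c']
print(toy_suite(["step_a", "step_b"], None))           # ['a', 'b']
```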

e- Compliance with pytest

parameters

Under the hood, the `@test_steps` decorator simply generates a wrapper function around your function and marks it with `@pytest.mark.parametrize`. The function wrapper is created using the excellent `decorator` library, so all marks that exist on it are kept in the process, as well as its name and signature.

Therefore `@test_steps` should be compliant with all native pytest mechanisms. For example, you can use decorators such as `@pytest.mark.parametrize` before or after it in the function decoration order (depending on your desired resulting test order).

If you execute such a test, it correctly executes all the steps for each parameter value.
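One way to picture the result is as a cartesian product of parameter values and steps. The hypothetical helper below only builds test ids with `itertools.product`; it does not call pytest, and it assumes the step name comes last in the id, as in the pytest-harvest tables of section 3. The actual order depends on where `@pytest.mark.parametrize` sits relative to `@test_steps`.

```python
from itertools import product


def toy_test_ids(func_name, param_values, step_names):
    """Hypothetical: one test id per (parameter value, step) pair, step last."""
    return [f"{func_name}[{value}-{step}]" for value, step in product(param_values, step_names)]


for test_id in toy_test_ids("test_suite", [1, 2], ["step_a", "step_b"]):
    print(test_id)
# test_suite[1-step_a]
# test_suite[1-step_b]
# test_suite[2-step_a]
# test_suite[2-step_b]
```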

fixtures

You can also use fixtures as usual, but special care has to be taken with function-scoped fixtures. Consider a generator-mode test with two steps, a and b, that uses a function-scoped fixture `my_fixture`.

This can be a bit misleading:

- pytest will call `my_fixture()` twice, because there are two pytest function executions, one for each step. So we might think that everything is fine...

- ...however, the second fixture instance is never passed to our test code: instead, the `my_fixture` instance that was passed as argument in the first step is used by all steps. Therefore, the test fails during step b.
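The pitfall comes from the generator keeping the values it was created with. The stdlib-only sketch below reproduces it with a counter standing in for a function-scoped fixture (all names are hypothetical): two instances are created, one per step, but only the first one ever reaches the running generator.

```python
from itertools import count


def demo_fixture_pitfall():
    counter = count()

    def my_fixture():
        # Stand-in for a function-scoped fixture: a fresh instance per call
        return next(counter)

    def suite_body(fixture_value):
        seen = [fixture_value]      # step a
        yield
        seen.append(fixture_value)  # step b: still the value from step a
        yield seen

    # Two test executions, so two fixture instances...
    instance_for_a = my_fixture()
    instance_for_b = my_fixture()
    # ...but the single generator was created with the first one only
    gen = suite_body(instance_for_a)
    next(gen)
    seen = next(gen)
    return instance_for_b, seen


print(demo_fixture_pitfall())  # (1, [0, 0])
```

Step b sees instance `0` even though instance `1` was created for it.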

It is possible to circumvent this behaviour by declaring explicitly what you expect:

- if you would like to share fixture instances across steps, decorate your fixture with `@cross_steps_fixture`;

- if you would like each step to have its own fixture instance, decorate your fixture with `@one_fixture_per_step`.

For example, once `my_fixture` is decorated with `@one_fixture_per_step`, each step uses its own fixture instance and the test succeeds (instance 2 is available at step b).

When a fixture is decorated with @one_fixture_per_step, the object that is injected in your test function is a transparent proxy of the fixture, so it behaves exactly like the fixture. If for some reason you want to get the 'true' inner wrapped object, you can do so using get_underlying_fixture(my_fixture).
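A "transparent proxy" here means an object that forwards attribute access to the real fixture instance. The toy below (hypothetical names, not the pytest-steps classes) shows one way such a proxy can fix the pitfall above: the generator keeps a single proxy object while the instance behind it is swapped for each step, and a helper unwraps the proxy, similar in spirit to `get_underlying_fixture`.

```python
class ToyStepProxy:
    """Hypothetical transparent proxy: forwards attribute access to its target."""

    def __init__(self, target):
        self._target = target

    def swap(self, new_target):
        # In this toy, called when a new step starts with a fresh fixture instance
        self._target = new_target

    def __getattr__(self, name):
        # Only called for attributes not found on the proxy itself
        return getattr(self._target, name)


def toy_unwrap(proxy):
    """Hypothetical helper returning the object currently behind the proxy."""
    return proxy._target


class Resource:
    def __init__(self, ident):
        self.ident = ident


proxy = ToyStepProxy(Resource(1))
print(proxy.ident)                       # 1 (step a's instance)
proxy.swap(Resource(2))
print(proxy.ident)                       # 2 (step b's instance, same proxy object)
print(type(toy_unwrap(proxy)).__name__)  # Resource
```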

2. Usage - 'explicit' mode

In 'explicit' mode, things are a bit more complex to write but can be easier to understand because it does not use generators, just simple function calls.

a- Basics

As in the other mode, simply decorate your test function with `@test_steps` and declare the steps that will be performed. In addition, add a `test_step` parameter to your function, which will receive the current step.

The easiest way to use it is to declare each step as a function.

Note: you can perform some reasoning about the step at hand inside the test function, by looking at the `test_step` object.
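In explicit mode, each step is an ordinary value passed to the test function. The toy loop below stands in for the parametrization that pytest-steps sets up (it is not the library's mechanism): the same body is called once per step with a different `test_step`, and it branches on the step's `__name__`. The names `step_a`, `step_b` and `suite_1` are hypothetical.

```python
def step_a():
    return "result of a"


def step_b():
    return "result of b"


def suite_1(test_step):
    """Explicit-mode style body: receive the current step and execute it."""
    # Step-specific reasoning based on the step object
    label = "last step" if test_step.__name__ == "step_b" else "intermediate step"
    return label, test_step()


# Toy stand-in for parametrization: one independent call per declared step
outcomes = {step.__name__: suite_1(step) for step in (step_a, step_b)}
print(outcomes)
# {'step_a': ('intermediate step', 'result of a'), 'step_b': ('last step', 'result of b')}
```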

Custom parameter name

You might want a name other than `test_step` to receive the current step. The `test_step_argname` argument can be used to change that name.

Variants: other types

This mechanism is actually nothing more than a pytest parameter, so it places no requirement on the type of `test_step`. It is therefore possible to use other types, for example to declare the test steps as strings instead of functions.

This has pros and cons:

- (+) you can declare the test suite before the step functions in the Python file (better readability!);

- (-) you cannot use `@depends_on` to decorate your step functions: you can only rely on the shared data container to create dependencies (as explained below).

b- Auto-skip/fail

In this explicit mode all steps are optional/independent by default: each of them will be run, whatever the execution result of others. If you wish to change this, you can use the @depends_on decorator to mark a step as to be automatically skipped or failed if some other steps did not run successfully.

For example, if `step_b` is decorated with `@depends_on(step_a)`, `step_b` will be skipped whenever `step_a` does not run successfully.
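The auto-skip behaviour can be modeled in plain Python. In the sketch below, `toy_depends_on` and `toy_run_explicit` are hypothetical stand-ins (not `@depends_on` itself): the decorator records dependencies on the step function, and the runner executes every step independently but skips a step whose dependencies did not pass. The text also mentions failing instead of skipping; the toy only skips.

```python
def toy_depends_on(*dependencies):
    """Hypothetical decorator: attach the steps this step depends on."""
    def decorate(step):
        step.toy_dependencies = dependencies
        return step
    return decorate


def step_a():
    raise RuntimeError("step a fails")


@toy_depends_on(step_a)
def step_b():
    return "step b ran"


def step_c():
    return "step c ran"  # no dependency: always runs


def toy_run_explicit(steps):
    """Run every step independently; skip a step whose dependencies did not pass."""
    status = {}
    for step in steps:
        deps = getattr(step, "toy_dependencies", ())
        if any(status.get(dep.__name__) != "passed" for dep in deps):
            status[step.__name__] = "skipped"
            continue
        try:
            step()
            status[step.__name__] = "passed"
        except Exception:
            status[step.__name__] = "failed"
    return status


print(toy_run_explicit([step_a, step_b, step_c]))
# {'step_a': 'failed', 'step_b': 'skipped', 'step_c': 'passed'}
```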

Note that if you use shared data (see below), you can perform similar and also more advanced dependency checks, by checking the contents of the shared data and calling `pytest.skip()` or `pytest.fail()` according to what is present.

Warning

The @depends_on decorator is only effective if the decorated step function is used 'as is' as an argument in @test_steps(). If a non-direct relation is used, such as using the test step name as argument, you should use a shared data container (see below) to manually create the dependency.

c- Shared data

In this explicit mode, all steps are independent by default, so they do not have access to each other's execution results. To solve this problem, you can add a `steps_data` argument to your test function. If you do so, a `StepsDataHolder` object will be injected in this variable, which you can use to store and retrieve results. Simply create fields on it and store whatever you like.
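A minimal stand-in for the shared container is a plain attribute bag. In the sketch below, `types.SimpleNamespace` plays the role of `StepsDataHolder` (an assumption for illustration; the real class comes from pytest-steps). The hypothetical `toy_run_suite` creates one container per call, whereas the text says the real one is created per parameter/fixture combination. Step b performs the kind of manual dependency check mentioned earlier, returning a marker where a real test would call `pytest.skip()`.

```python
from types import SimpleNamespace


def step_a(steps_data):
    # Store an intermediate result for later steps
    steps_data.intermediate_a = "some intermediate result created in step a"


def step_b(steps_data):
    # Manual dependency check: a real test would call pytest.skip() here
    if not hasattr(steps_data, "intermediate_a"):
        return "skipped: step a produced nothing"
    return steps_data.intermediate_a + " ... augmented"


def toy_run_suite(steps):
    """Hypothetical runner: one shared container for all steps of one suite run."""
    steps_data = SimpleNamespace()
    return [step(steps_data) for step in steps]


print(toy_run_suite([step_a, step_b])[1])
# some intermediate result created in step a ... augmented
```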

d- Calling decorated functions manually

In 'explicit' mode it is possible to call your test functions outside of pytest runners, exactly the same way we saw in generator mode.

An example can be found here.

e- Compliance with pytest

You can add as many `@pytest.mark.parametrize` marks and pytest fixtures to your test suite function as you like; it should work as expected: a new `steps_data` object will be created every time a new parameter/fixture combination is created, and that object will be shared across steps with the same parameters and fixtures.

Concerning fixtures:

- by default, all function-scoped fixtures are 'one per step' in this mode (you do not even need to use the `@one_fixture_per_step` decorator, although it does not hurt);

- if you wish a fixture to be shared across several steps, decorate it with `@cross_steps_fixture`.

3. Usage with pytest-harvest

a- Enhancing the results df

You might already use pytest-harvest to turn your tests into functional benchmarks. When you combine it with pytest-steps, you end up with one row per step in the synthesis table. For example:

| test_id | status | duration_ms | _step_name | algo_param | dataset | accuracy |
|---|---|---|---|---|---|---|
| test_my_app_bench[A-1-train] | passed | 2.00009 | train | 1 | my dataset #A | 0.832642 |
| test_my_app_bench[A-1-score] | passed | 0 | score | 1 | my dataset #A | nan |
| test_my_app_bench[A-2-train] | passed | 1.00017 | train | 2 | my dataset #A | 0.0638134 |
| test_my_app_bench[A-2-score] | passed | 0.999928 | score | 2 | my dataset #A | nan |
| test_my_app_bench[B-1-train] | passed | 0 | train | 1 | my dataset #B | 0.870705 |
| test_my_app_bench[B-1-score] | passed | 0 | score | 1 | my dataset #B | nan |
| test_my_app_bench[B-2-train] | passed | 0 | train | 2 | my dataset #B | 0.764746 |
| test_my_app_bench[B-2-score] | passed | 1.0004 | score | 2 | my dataset #B | nan |

You might wish to use the provided handle_steps_in_results_df utility method to replace the index with a 2-level multiindex (test id without step, step id).
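The split performed on the index can be pictured on a single test id. `toy_split_step_id` below is a hypothetical, string-only helper (the real `handle_steps_in_results_df` works on the pytest-harvest results DataFrame). It assumes the step name is the last dash-separated element between the brackets, as in the table above, so it would not handle parameter values that themselves contain dashes.

```python
def toy_split_step_id(test_id, step_name):
    """Hypothetical: 'test_x[A-1-train]' -> ('test_x[A-1]', 'train')."""
    func_name, bracket, rest = test_id.partition("[")
    if not bracket or not rest.endswith("]"):
        raise ValueError(f"no parameters in {test_id!r}")
    parts = rest[:-1].split("-")
    if parts[-1] != step_name:
        raise ValueError(f"{test_id!r} does not end with step {step_name!r}")
    remaining = parts[:-1]
    base = f"{func_name}[{'-'.join(remaining)}]" if remaining else func_name
    return base, step_name


print(toy_split_step_id("test_my_app_bench[A-1-train]", "train"))
# ('test_my_app_bench[A-1]', 'train')
```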

b- Pivoting the results df

If you prefer to see one row per test with the step details in columns, this package also provides new default `module_results_df_steps_pivoted` and `session_results_df_steps_pivoted` fixtures to directly get the pivoted version, and a `pivot_steps_on_df` utility method to perform the pivot transform easily.

You will for example obtain this kind of pivoted table:

| test_id | algo_param | dataset | train/status | train/duration_ms | train/accuracy | score/status | score/duration_ms |
|---|---|---|---|---|---|---|---|
| test_my_app_bench[A-1] | 1 | my dataset #A | passed | 2.00009 | 0.832642 | passed | 0 |
| test_my_app_bench[A-2] | 2 | my dataset #A | passed | 1.00017 | 0.0638134 | passed | 0.999928 |
| test_my_app_bench[B-1] | 1 | my dataset #B | passed | 0 | 0.870705 | passed | 0 |
| test_my_app_bench[B-2] | 2 | my dataset #B | passed | 0 | 0.764746 | passed | 1.0004 |
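The pivot itself can be sketched with plain dictionaries. `toy_pivot_steps` is hypothetical (the package's `pivot_steps_on_df` operates on a DataFrame): it groups rows by test id without the step, keeps the parameter columns once, and renames every other column to `<step>/<column>`. The two input rows reuse the values of test `A-1` from the tables above; the score row's accuracy (`nan` in the first table) is simply left out.

```python
def toy_pivot_steps(rows, param_cols):
    """Hypothetical pure-Python pivot: one output row per test, step columns prefixed."""
    pivoted = {}
    for row in rows:
        out = pivoted.setdefault(row["test_id"], {col: row[col] for col in param_cols})
        for col, value in row.items():
            if col in param_cols or col in ("test_id", "step_name"):
                continue
            out[f"{row['step_name']}/{col}"] = value
    return pivoted


rows = [
    {"test_id": "test_my_app_bench[A-1]", "step_name": "train", "algo_param": 1,
     "dataset": "my dataset #A", "status": "passed", "duration_ms": 2.00009,
     "accuracy": 0.832642},
    {"test_id": "test_my_app_bench[A-1]", "step_name": "score", "algo_param": 1,
     "dataset": "my dataset #A", "status": "passed", "duration_ms": 0},
]
print(toy_pivot_steps(rows, ["algo_param", "dataset"]))
```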

c- Examples

Two examples are available that should be quite straightforward for those familiar with pytest-harvest:

- here an example relying on default fixtures, to show how simple it is to satisfy the most common use cases.

- here an advanced example where the custom synthesis is created manually from the dictionary provided by pytest-harvest, thanks to helper methods.

Main features / benefits

- Split tests into steps. Although the best practices in testing are very much in favor of having each test completely independent of the other ones (for example for distributed execution), there is definitely some value, in terms of results readability, in breaking down tests into chained sub-tests (steps). The `@test_steps` decorator provides an intuitive way to do that without forcing any data model (steps can be functions, objects, etc.).

- Multi-style: an explicit mode and a generator mode are supported; developers may wish to use one or the other depending on their coding style or readability target.

- Steps can share data. In generator mode this works out of the box. In explicit mode, all steps in the same test suite can share data through the injected `steps_data` container (name is configurable).

- Steps dependencies can be defined: a `@depends_on` decorator (explicit mode) or an `optional_step` context manager (generator mode) allows you to specify that a given test step should be skipped or failed if its dependencies did not complete.

See Also

- pytest-cases, to go further and separate test data from test functions

Others

Do you like this library? You might also like my other Python libraries.

Want to contribute?

Details on the github page: https://github.com/smarie/python-pytest-steps